OpenAI CEO Sam Altman has acknowledged that the hallucination rate of the company's latest AI model, GPT-4o, is worse than that of its predecessor, and he promised significant improvements in the next-generation model. Altman also expressed concern about AI's psychological effects on users, saying that OpenAI will pursue privacy and mental health protections alongside technical mitigations.

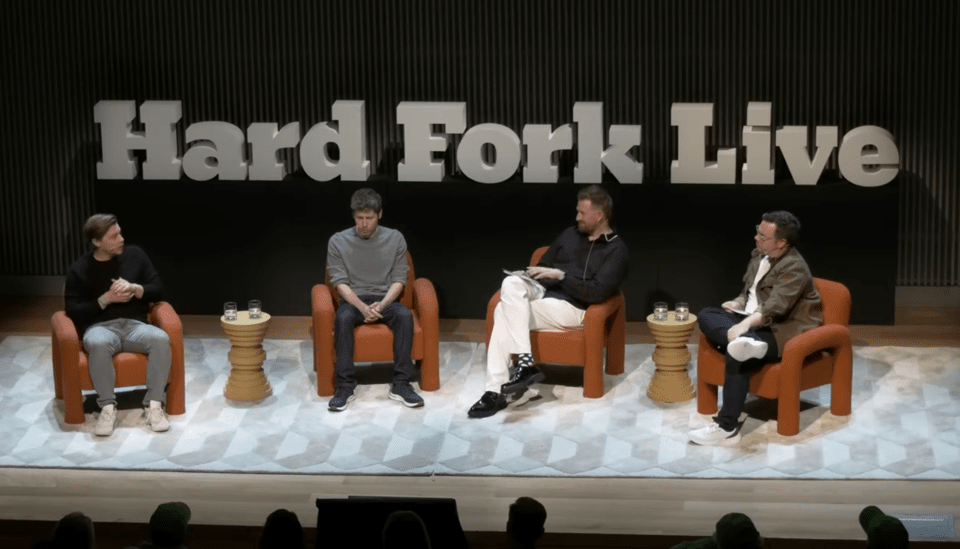

Speaking recently at a live taping of the podcast ‘Hard Fork’ in San Francisco, Altman said, “It is true that the hallucination rate of GPT-4o is slightly higher than GPT-4’s. However, we will greatly improve this in the next version.” He explained that OpenAI is still at an early stage of aligning its reasoning models and learning how people use them, adding, “We have learned a lot, and user satisfaction will increase with the next-generation model.”

Hallucination refers to errors in which generative AI presents fabricated information as if it were real, and concerns about how serious the problem is continue to grow. The interviewer pointed out that GPT-4o produces false information more often than earlier models and sometimes subtly slips in bizarre claims.

The conversation also turned to AI's psychological impact on users. The interviewer noted growing reports of GPT-4o users falling into conspiratorial thinking or having mystical experiences that leave them in unstable mental states. Altman responded, “OpenAI takes this issue very seriously, and we are trying not to repeat the mistake past tech companies made of responding too late.”

To address this, OpenAI is rolling out several measures. If a user appears to be in crisis, the model recommends seeking professional help, and if a conversation takes a harmful turn, it is cut off or redirected. There is also a warning against over-reliance on AI, which users can adjust in their personal settings. However, Altman admitted, “We have not yet figured out how to effectively deliver such warnings to users who are extremely psychologically vulnerable.”

While acknowledging AI’s side effects, Altman stressed that there are many positive use cases. “There are cases where AI has actually helped save marriages or improve family communication,” he said, concluding that “AI technology is clearly a net positive.” He shared an anecdote about a man he met in Costa Rica who credited ChatGPT with saving his marriage, and expressed hope that AI can help repair human relationships.

The interview is notable for addressing hallucination, privacy, and mental health together, all issues that have emerged as AI technology advances. Altman emphasized, “OpenAI will not only develop technology but will also consider the social responsibility surrounding it.”