Google has unveiled ‘Gemma 3n’, an ultra-lightweight artificial intelligence (AI) model that can run on consumer devices such as smartphones, laptops, and tablets. The model can operate with as little as 2GB of memory and does not need a cloud connection, drawing attention as a technology that could accelerate the mainstream adoption of AI.

‘Gemma 3n’ was announced at Google I/O 2025, the company’s annual developer event. The model is multimodal: it processes not only text but also images and audio, with video support planned for the future. Its most notable feature is that it can run on hardware with smartphone-level specifications.

The model applies Google DeepMind’s ‘Per-Layer Embedding’ technology, which dramatically reduces the memory that large-scale models require. As a result, it can operate with only 2GB to 3GB of memory while housing 5 billion to 8 billion parameters.
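As a rough back-of-the-envelope illustration of why memory use depends on both the number of parameters and how they are stored, the sketch below estimates a model's weight footprint. It assumes the footprint is dominated by weights; the function name, the bit widths, the `resident_fraction` parameter, and the example figures are illustrative assumptions, not published details of Gemma 3n.

```python
def weight_footprint_gb(num_params: float,
                        bits_per_param: int,
                        resident_fraction: float = 1.0) -> float:
    """Estimate the memory (in GB, 1 GB = 1e9 bytes) taken by model weights.

    resident_fraction is a hypothetical knob: the share of parameters that
    must sit in working memory at once. A technique that keeps part of the
    parameters elsewhere would lower it below 1.0.
    """
    if not 0.0 <= resident_fraction <= 1.0:
        raise ValueError("resident_fraction must be between 0 and 1")
    total_bytes = num_params * bits_per_param / 8
    return total_bytes * resident_fraction / 1e9


# Illustrative figures only: a 1-billion-parameter model.
full_precision = weight_footprint_gb(1e9, bits_per_param=16)
compact = weight_footprint_gb(1e9, bits_per_param=4, resident_fraction=0.5)
print(f"16-bit, all resident: {full_precision:.2f} GB")
print(f"4-bit, half resident: {compact:.2f} GB")
```

The point of the sketch is only that fewer bits per parameter and a smaller resident share both shrink the working-memory requirement; it does not model activations or runtime overhead.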

Additionally, ‘Gemma 3n’ uses the ‘MatFormer’ architecture, which nests smaller models inside a single larger model. Depending on the situation, only a smaller nested model needs to be executed, which improves both energy efficiency and response speed.
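The nesting idea can be sketched with a toy feed-forward layer in which a smaller model reuses a prefix of the larger model's hidden units. This is a minimal illustration under that assumption, not Google's implementation; the class `NestedFeedForward` and the parameter `active_hidden` are hypothetical names.

```python
import random


class NestedFeedForward:
    """Toy feed-forward layer whose first k hidden units form a smaller model."""

    def __init__(self, d_model: int, d_hidden: int, seed: int = 0):
        rng = random.Random(seed)
        self.d_model = d_model
        self.d_hidden = d_hidden
        # w_in[j] holds the input weights of hidden unit j,
        # w_out[j] holds the weights from hidden unit j to each output.
        self.w_in = [[rng.uniform(-1, 1) for _ in range(d_model)]
                     for _ in range(d_hidden)]
        self.w_out = [[rng.uniform(-1, 1) for _ in range(d_model)]
                      for _ in range(d_hidden)]

    def _width(self, active_hidden):
        k = self.d_hidden if active_hidden is None else active_hidden
        if not 1 <= k <= self.d_hidden:
            raise ValueError("active_hidden must be in 1..d_hidden")
        return k

    def forward(self, x, active_hidden=None):
        """Run the layer using only the first `active_hidden` hidden units."""
        k = self._width(active_hidden)
        y = [0.0] * self.d_model
        for j in range(k):
            pre = sum(w * xi for w, xi in zip(self.w_in[j], x))
            h = pre if pre > 0 else 0.0  # ReLU activation
            for i in range(self.d_model):
                y[i] += h * self.w_out[j][i]
        return y

    def param_count(self, active_hidden=None):
        """Number of weights touched when running at the given width."""
        return 2 * self._width(active_hidden) * self.d_model


layer = NestedFeedForward(d_model=4, d_hidden=8)
x = [1.0, 0.5, -0.25, 2.0]
small = layer.forward(x, active_hidden=2)  # smaller nested model
full = layer.forward(x)                    # full model, same weights
print(layer.param_count(2), layer.param_count())
```

Because the small path reads a subset of the same weights, one stored model can serve both a cheaper and a more capable configuration, which is the trade-off between efficiency and quality that the paragraph above describes.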

Because the resources needed to run the model have been minimized, most functions work without the high-performance graphics processing unit (GPU) that large AI models typically require. For instance, one of the text-based models can run with just 861MB, an amount well within the memory of an ordinary PC.

‘Gemma 3n’ supports functions including image recognition, real-time speech recognition, language translation, and audio analysis, all designed to be processed on the device. Google expects the model to offset the limitations of existing cloud-based AI by enhancing privacy and providing immediate responses.

Moreover, the ability to process multiple languages, including Korean, has been strengthened. Image interpretation performance has also improved, enabling the processing of visual information with resolutions ranging from 256×256 to a maximum of 768×768.

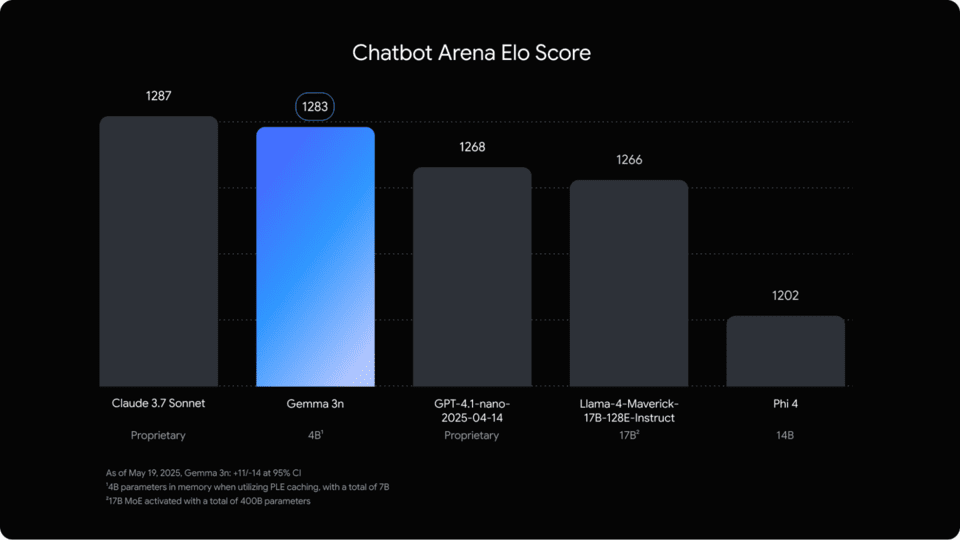

Developers can currently try a preview of ‘Gemma 3n’ through Google’s AI development tools. Google plans to integrate the model into its Android and Chrome platforms in the future. Gemma 3n has also ranked near the top in user preference (Elo score) among numerous open and closed models, a recognition of its performance.

This announcement is seen as marking the beginning of an era in which AI functions can operate directly on everyday smart devices without relying on cloud servers.