U.S. AI company Anthropic is reportedly expanding ‘Workbench,’ the developer tool for its language model ‘Claude.’ Workbench is designed to let developers test and configure calls to the Claude API with minimal setup.

The expansion was recently reported by TestingCatalog, a site that specializes in new AI features and beta testing information. TestingCatalog tracks the latest changes to generative AI products, including Claude, ChatGPT, and Gemini, and regularly monitors developer-facing features.

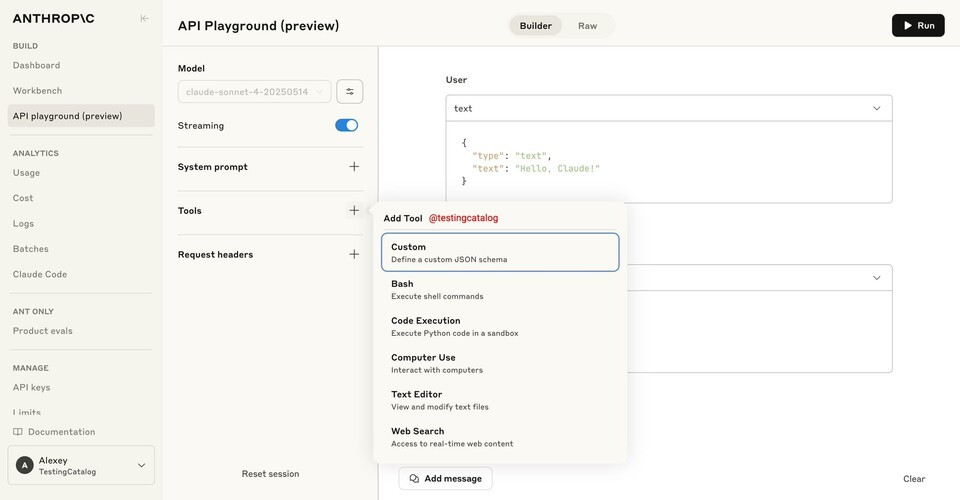

According to TestingCatalog, a new feature called ‘API Playground’ has been added to Workbench. It lets users experiment with Claude API settings without writing any code: response temperature, cache settings, and custom headers can all be adjusted directly from a web interface.
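For readers who want to see what these settings look like outside a web interface, the sketch below builds (but does not send) a request in the shape of Anthropic's public Messages API. It is an illustration under assumptions, not a description of how API Playground works internally: the helper names `build_request` and `temperature_sweep`, the placeholder model ID and API key, the system prompt text, and the `x-request-tag` custom header are all hypothetical.

```python
import json

# Public Messages API endpoint; shown for reference, this sketch never calls it.
API_URL = "https://api.anthropic.com/v1/messages"


def build_request(prompt, temperature=0.7, cache_system_prompt=False,
                  extra_headers=None, model="YOUR_MODEL_ID",
                  api_key="YOUR_API_KEY"):
    """Return (headers, body) for a Messages API call without sending it.

    model and api_key are placeholders; substitute real values before use.
    """
    # The Messages API accepts temperatures between 0.0 and 1.0.
    if not 0.0 <= temperature <= 1.0:
        raise ValueError("temperature must be between 0.0 and 1.0")

    headers = {
        "x-api-key": api_key,
        "anthropic-version": "2023-06-01",
        "content-type": "application/json",
    }
    # Custom headers (hypothetical here) are merged on top of the required ones.
    headers.update(extra_headers or {})

    system_block = {"type": "text", "text": "You are a concise assistant."}
    if cache_system_prompt:
        # Marks the system prompt as cacheable (prompt caching).
        system_block["cache_control"] = {"type": "ephemeral"}

    body = {
        "model": model,
        "max_tokens": 256,
        "temperature": temperature,
        "system": [system_block],
        "messages": [{"role": "user", "content": prompt}],
    }
    return headers, body


def temperature_sweep(prompt, temperatures):
    """Build one request body per temperature, mimicking repeated manual tests."""
    return [build_request(prompt, temperature=t)[1] for t in temperatures]


if __name__ == "__main__":
    headers, body = build_request(
        "Summarize the release notes in two sentences.",
        temperature=0.2,
        cache_system_prompt=True,
        extra_headers={"x-request-tag": "playground-test"},  # hypothetical header
    )
    print(json.dumps(body, indent=2))
```

Sending the result would be a single HTTP POST of `body` as JSON to `API_URL` with `headers`. Keeping construction separate from sending makes it easy to compare parameter combinations side by side, which is the kind of repetitive adjustment the Playground is reported to handle through its interface.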

For developers and product teams preparing an API integration, this is useful because it makes repetitive testing and parameter tuning easy to run. However, the feature has not been officially released and is only partially enabled in a ‘preview’ state.

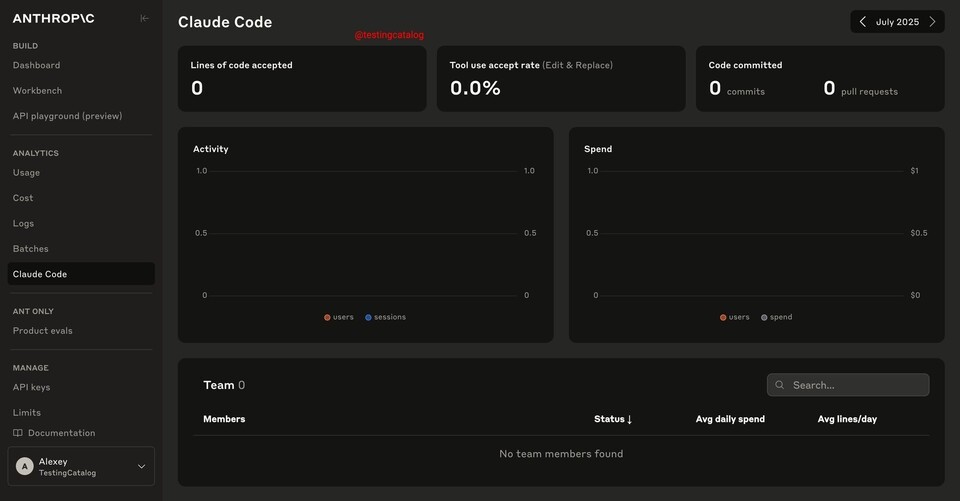

Workbench's ‘Analytics’ tab is also testing a view that visualizes Claude Code activity. Claude Code is a command-line tool that generates code and can commit changes directly to a version control system.

The new analytics view shows how much Claude Code contributes to a project, for example by number of commits. Companies and development teams could use it as an objective measure of how much code AI has contributed to real projects. However, the specific metrics and analysis criteria have yet to be disclosed.
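Because the actual metrics are undisclosed, the following is only a rough sketch of what a commit-based contribution measure could look like. It assumes AI-assisted commits carry a `Co-Authored-By: Claude` trailer in the commit message; that marker, the function name `ai_commit_share`, and the sample messages are assumptions made for illustration, not the method Workbench uses.

```python
import re

# Assumed marker: a "Co-Authored-By: Claude ..." trailer line in the commit message.
# This is an illustrative convention, not a confirmed Workbench criterion.
CLAUDE_TRAILER = re.compile(r"^co-authored-by:\s*claude\b",
                            re.IGNORECASE | re.MULTILINE)


def ai_commit_share(commit_messages):
    """Count commits carrying the assumed trailer and their share of the total."""
    total = len(commit_messages)
    assisted = sum(1 for msg in commit_messages if CLAUDE_TRAILER.search(msg))
    share = assisted / total if total else 0.0
    return {"total": total, "assisted": assisted, "share": share}


if __name__ == "__main__":
    # Hypothetical commit messages for demonstration.
    sample = [
        "Fix pagination bug\n\nCo-Authored-By: Claude <noreply@anthropic.com>",
        "Update README",
        "Add retry logic\n\nCo-Authored-By: Claude <noreply@anthropic.com>",
        "Mention Claude in the docs",  # no trailer, so not counted
    ]
    print(ai_commit_share(sample))
```

In a real repository the messages could come from the output of `git log`. A commit count is a coarse signal, since it ignores commit size and later rewrites, so any real dashboard would likely need additional criteria.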

TestingCatalog also reports that a section named ‘Product Evals’ has been added to Workbench. It is currently marked ‘coming soon,’ and its contents have not been revealed. Judging by the name, it is likely to include evaluation tools such as prompt response quality assessment or comparative testing between models.

These Workbench additions are currently being tested only in a closed build in the U.S. and a few other regions, and they are not yet available to the general public, including users in Korea. Anthropic has not announced a release schedule or regional rollout plans for these features.

The expansion is seen as part of a broader trend of AI tools moving beyond chatbot features into practical development and operations environments. Just as OpenAI gradually opened up developer features around ChatGPT, Anthropic is reshaping the Claude ecosystem into tooling aimed at technology-focused organizations.

If the new Workbench features are officially released, Claude is expected to serve not only as a conversational AI but also as a comprehensive tool used across day-to-day work, coding, and product development.