“Whispering, laughing, and even changing intonations”

Creating AI voice acting with prompts

From AI that reads text to AI that performs

Voice generation technology has changed dramatically. Voice AI company ElevenLabs recently released ‘Eleven v3 Alpha’, a speech synthesis model that can express emotion. The model goes beyond simple reading, giving fine-grained control over tone, emotion, and sound effects.

To get good results, how you write the ‘prompt’ matters. A prompt is the text you give the AI, and the resulting voice can vary significantly depending on what you write and how you phrase it.

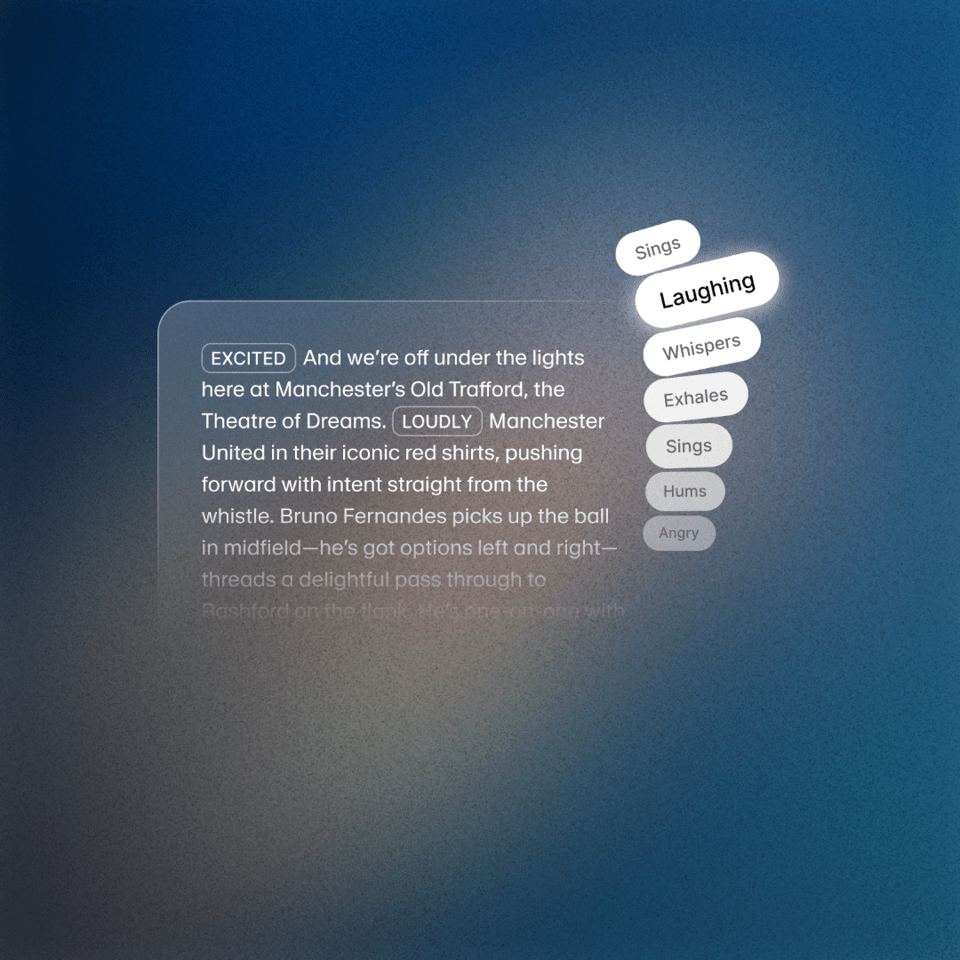

Eleven v3 uses a feature called ‘voice tags’: instructions written inside square brackets [ ]. If you put an expression such as [whispering], [laughing], or [in a sad tone] at the beginning of a sentence, the AI reads it in an actual whisper, with a laugh, or in a sad voice. For example: “[whispering] Don’t tell anyone today.”
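Because voice tags are just bracketed text inside the prompt, ordinary string tools can inspect them. The sketch below uses a hypothetical helper, `extract_tags`, which is not part of any ElevenLabs SDK, to list the tags in a line. It assumes tags are written in single, non-nested square brackets.

```python
import re

# Assumption: a tag is any run of text inside one pair of square brackets,
# e.g. [whispering]; nested brackets are not supported.
TAG_PATTERN = re.compile(r"\[([^\[\]]+)\]")


def extract_tags(text: str) -> list:
    """Return the tag names found in a prompt, in order of appearance."""
    return [tag.strip() for tag in TAG_PATTERN.findall(text)]


print(extract_tags("[whispering] Don’t tell anyone today."))
```

Running this on the example line should list the single tag `whispering`; a line with no brackets yields an empty list.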

You can also insert sound effects such as [applause] or [door opening]. Emotions and effects can change naturally in the middle of a sentence, the way an actor's delivery does: even a single sentence can move from joy to sadness and back to a calm tone.
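A mid-sentence shift like this can be assembled from a list of (tag, text) pairs. The helper below, `compose`, is a hypothetical illustration; the tag names `happy`, `sad`, and `calm` are placeholders chosen to mirror the joy, sadness, calm sequence described above, not a confirmed list of tags the model recognizes.

```python
from typing import List, Optional, Tuple


def compose(segments: List[Tuple[Optional[str], str]]) -> str:
    """Join (tag, text) pairs into a single prompt line.

    A segment whose tag is None is plain text; a segment with empty text is
    a standalone cue, such as a sound effect.
    """
    parts = []
    for tag, text in segments:
        piece = f"[{tag}] {text}" if tag else text
        parts.append(piece.strip())
    # Skip empty pieces so we never emit doubled spaces.
    return " ".join(p for p in parts if p)


line = compose([
    ("happy", "We won the contest!"),
    ("applause", ""),  # sound effect with no spoken text
    ("sad", "But the team is splitting up."),
    ("calm", "Still, we will be fine."),
])
print(line)
```

Keeping segments as data rather than hand-typed strings makes it easy to reorder emotions or swap a sound effect without retyping the whole line.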

The model can also generate dialogue between more than one speaker. Write the script in conversational format, assign a different voice to each speaker, and add emotion tags before each speaker’s line to make it sound as if real people are talking.
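One way to keep a multi-speaker script organized is to store each line as (speaker, tag, text) and map speakers to voices in one place. In this sketch the speaker names, the `VOICE_ID_…` strings, and the `{"voice_id", "text"}` entry shape are all hypothetical placeholders; the actual request format should be taken from ElevenLabs' own documentation.

```python
# Hypothetical placeholder IDs; real voice IDs come from your ElevenLabs account.
VOICES = {"Mina": "VOICE_ID_MINA", "Joon": "VOICE_ID_JOON"}

# Each line: (speaker, emotion tag, spoken text). Tag names are illustrative.
script = [
    ("Mina", "excited", "Did you hear the news?"),
    ("Joon", "curious", "No, what happened?"),
    ("Mina", "whispering", "I'll tell you later."),
]


def build_dialogue(script, voices):
    """Turn (speaker, tag, text) lines into per-line voice/text entries."""
    entries = []
    for speaker, tag, text in script:
        if speaker not in voices:
            raise KeyError(f"no voice assigned to speaker {speaker!r}")
        # The emotion tag goes in front of the speaker's line.
        entries.append({"voice_id": voices[speaker], "text": f"[{tag}] {text}"})
    return entries


for entry in build_dialogue(script, VOICES):
    print(entry["voice_id"], entry["text"])
```

Failing loudly on an unassigned speaker catches typos in speaker names before any audio is generated.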

When writing prompts, use natural speech patterns. Punctuation matters too: periods, commas, and line breaks all affect delivery. To emphasize a word, capital letters or emotion tags can be effective. Very short prompts can produce unstable results, so it is best to keep the text longer than 250 characters.
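The length guideline is easy to check before submitting a prompt. The hypothetical `prompt_warnings` helper below flags text of 250 characters or fewer and unbalanced square brackets. It assumes tags and spaces count toward the length, which the guideline itself does not specify, and it is a heuristic, not a validator the model applies.

```python
def prompt_warnings(text: str, min_chars: int = 250) -> list:
    """Return human-readable warnings for a prompt (heuristic only)."""
    warnings = []
    # Assumption: the raw string length, tags included, is what matters.
    if len(text) <= min_chars:
        warnings.append(
            f"prompt is {len(text)} characters; aim for more than {min_chars}"
        )
    # A mismatched bracket usually means a voice tag was typed incorrectly.
    if text.count("[") != text.count("]"):
        warnings.append("unbalanced square brackets; a voice tag may be malformed")
    return warnings


for warning in prompt_warnings("[whispering] Don’t tell anyone today."):
    print(warning)
```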

“[laughing] Today was really a fun day! [whispering] But it’s our secret.”

Written like this, the line is read with a laugh and then shifts abruptly to a whisper, conveying the flow of emotion as if a real actor were performing.
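To see how a tagged line breaks down into emotional beats, it can be split into (tag, text) segments. The hypothetical `split_segments` helper below relies on the fact that Python's `re.split` keeps captured groups in its output. The sample line follows the same laughing-then-whispering pattern, and the helper assumes non-nested brackets.

```python
import re
from typing import List, Optional, Tuple

# With a capturing group, re.split returns [before, tag1, text1, tag2, text2, ...].
TAG_SPLIT = re.compile(r"\[([^\[\]]+)\]")


def split_segments(text: str) -> List[Tuple[Optional[str], str]]:
    """Split a tagged line into (tag, text) segments; untagged lead-in gets None."""
    parts = TAG_SPLIT.split(text)
    segments = []
    if parts[0].strip():
        segments.append((None, parts[0].strip()))
    # Odd indices hold tag names, even indices (from 2) hold the text after each tag.
    for tag, chunk in zip(parts[1::2], parts[2::2]):
        segments.append((tag.strip(), chunk.strip()))
    return segments


sample = "[laughing] Today was really a fun day! [whispering] Keep it between us."
for tag, chunk in split_segments(sample):
    print(f"{tag or 'default'}: {chunk}")
```

Listing the segments this way makes it easy to review where each emotional change falls before generating audio.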

ElevenLabs says the v3 model can be used to create human-sounding AI speech for applications such as audiobooks, animated characters, customer support, and educational content. Korean support in particular has been enhanced, covering emotional expression, intonation control, and even dialects, which broadens its potential uses.

Conventional TTS (text-to-speech) technology simply read text aloud, but Eleven v3 is closer to a tool that lets creators direct a performance. The key to AI that speaks, and that makes listeners feel something, is a well-written prompt.