Google DeepMind has unveiled SIMA 2, its next-generation AI agent, intensifying competition in general AI technology.

SIMA 2 integrates Gemini, which broadens its ability to understand and reason across diverse environments and significantly improves its performance on complex tasks. According to the research team, SIMA 2 is built to improve its own performance by learning from experience.

Google DeepMind first introduced SIMA 1 in March last year. SIMA 1 was trained on a variety of 3D game data to follow instructions across virtual environments, but it completed only 31% of complex tasks, exposing clear limitations. DeepMind developed SIMA 2 to address these shortcomings.

Joe Marino, a senior researcher at DeepMind, said SIMA 2 performs about twice as well as SIMA 1. He emphasized that the most significant change is its ability to complete complex tasks even in environments it has never seen before. He added that SIMA 2’s self-improvement structure, which builds its capabilities from experience, can be seen as a step forward in general AI research.

DeepMind highlighted the concept of an embodied agent. “Embodied” means “having a body.” An embodied agent, like a robot, performs tasks by directly observing and interacting with its environment. A non-embodied agent, by contrast, operates only on a screen, handling tasks such as scheduling or note-taking. According to the research team, an embodied agent’s ability to work across varied environments is a key concept in general AI research.

Jane Wang, a senior researcher at DeepMind, said SIMA 2’s improvements go beyond gaming ability. She explained that SIMA 2 is designed to understand what is happening around it and to handle user requests with common sense.

In a demonstration, DeepMind showed how SIMA 2 decides what to do. In ‘No Man’s Sky,’ SIMA 2 described its surroundings, recognized a distress beacon, and planned its route accordingly. The research team noted, “The agent observed the environment and decided the next action on its own.”

SIMA 2 combines language with color and object information to draw inferences. Given the command “move to the house with the ripe tomato color,” SIMA 2 reasoned that ripe tomatoes are red and selected the red house as its target. The demonstration made this internal reasoning step clearly visible.

SIMA 2 can also follow emoji-based commands. Marino explained, “When the emojis for an axe and a tree were input, SIMA 2 performed the task of chopping the tree.”

DeepMind further reported that in photorealistic virtual worlds created by its generative world model Genie, SIMA 2 identified and interacted with objects such as benches, trees, and butterflies.

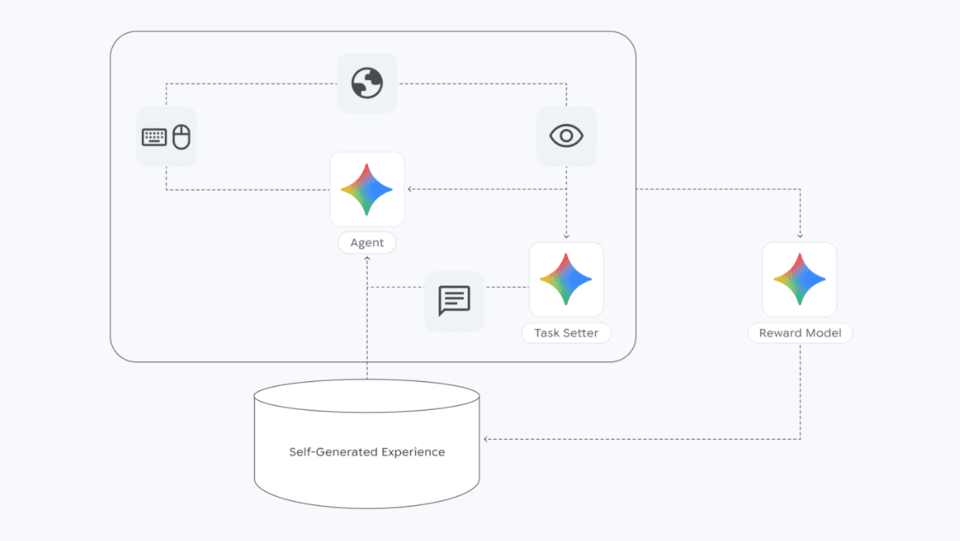

SIMA 2 also moves away from SIMA 1’s reliance on human data toward self-learning. After initial training on human play data, SIMA 2 uses Gemini to generate new tasks in new environments. A reward model then evaluates its actions, and the agent learns from this feedback to improve its performance.

DeepMind explained that this approach lets the agent expand its abilities through trial and error instead of depending on humans to supply all of its training data. This autonomy in learning marks a meaningful shift.
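DeepMind has not published implementation details for this loop, so the following is only a hypothetical toy illustration of the generic cycle the article describes: a model proposes a task, the agent attempts it, a reward model scores the attempt, and the agent updates from that score. `propose_task` and `reward_model` are invented stand-ins for Gemini and DeepMind’s reward model, not their APIs, and the epsilon-greedy value update is a standard reinforcement-learning technique swapped in purely for illustration.

```python
import random
from dataclasses import dataclass, field

# Hypothetical toy environment: each task has exactly one correct action.
TASKS = {
    "chop tree": "use_axe",
    "find beacon": "follow_signal",
    "enter red house": "walk_to_red",
}
ACTIONS = ["use_axe", "follow_signal", "walk_to_red", "jump"]


def propose_task(rng: random.Random) -> str:
    """Hypothetical stand-in for a task-generating model (Gemini in the article)."""
    return rng.choice(list(TASKS))


def reward_model(task: str, action: str) -> float:
    """Hypothetical stand-in for a learned reward model: 1.0 if the action solves the task."""
    return 1.0 if TASKS[task] == action else 0.0


@dataclass
class ToyAgent:
    lr: float = 0.5
    # Estimated reward for each (task, action) pair; unseen pairs default to 0.0.
    values: dict = field(default_factory=dict)

    def act(self, task: str, rng: random.Random, epsilon: float) -> str:
        # Epsilon-greedy: explore with probability epsilon, else pick the best-valued action.
        if rng.random() < epsilon:
            return rng.choice(ACTIONS)
        return max(ACTIONS, key=lambda a: self.values.get((task, a), 0.0))

    def learn(self, task: str, action: str, reward: float) -> None:
        # Move the stored estimate a fraction lr of the way toward the observed reward.
        key = (task, action)
        old = self.values.get(key, 0.0)
        self.values[key] = old + self.lr * (reward - old)


def self_improve(agent: ToyAgent, episodes: int, seed: int = 0, epsilon: float = 0.3) -> list:
    """Run the propose -> act -> score -> learn loop; returns the reward from each episode."""
    rng = random.Random(seed)
    rewards = []
    for _ in range(episodes):
        task = propose_task(rng)
        action = agent.act(task, rng, epsilon)
        reward = reward_model(task, action)
        agent.learn(task, action, reward)
        rewards.append(reward)
    return rewards


def greedy_success_rate(agent: ToyAgent) -> float:
    """Fraction of tasks solved when the agent acts greedily (no exploration)."""
    rng = random.Random(0)
    hits = sum(reward_model(t, agent.act(t, rng, epsilon=0.0)) for t in TASKS)
    return hits / len(TASKS)
```

An untrained `ToyAgent` solves only the task whose correct action happens to win the initial tie; after a few hundred episodes of `self_improve`, its greedy policy solves every toy task without any human-labelled examples, which is the trial-and-error idea in miniature.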

Frederic Besse, a senior research engineer at DeepMind, said SIMA 2 is closer to the high-level decision-making and task comprehension that real-world robotics requires. He explained that before a robot can move to a specific place, it must first understand what the target is and where it is in space, and that SIMA 2 addresses this kind of understanding.

DeepMind has not yet set a timeline for applying SIMA 2 to physical robotic systems; the company is developing a separate robotics model. DeepMind said the preview release of SIMA 2 is intended to assess its potential uses and opportunities for collaboration.