In an era when artificial intelligence (AI) is increasingly replacing human judgment, Sony AI, the research arm of Japan’s Sony Group, has introduced a new standard for tackling AI’s bias problem. AI is becoming embedded in daily life, with applications ranging from facial recognition in smartphone cameras to autonomous vehicles, medical diagnostics, and hiring assessments. However, AI judgments are not always fair. Often the cause is ‘data bias’ that developers may not even be aware of, which produces outcomes that disadvantage certain genders, races, or age groups.

The root cause of this issue lies in ‘data,’ not technology. AI learns about the world from its training data. If that data is skewed or collected without consent, the resulting AI is flawed from the start. To address this, Sony AI officially released the world’s first ‘Fair Human-Centric Image Benchmark’ (FHIBE) on November 5 (local time). FHIBE is a new standard designed to detect discriminatory errors in AI’s facial and body recognition and to help correct them.

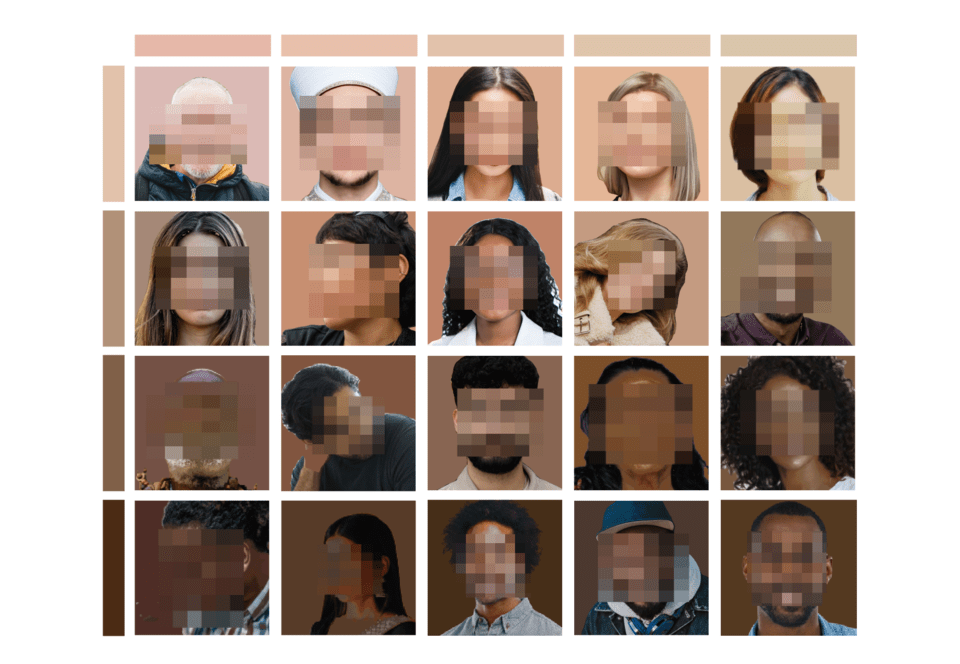

According to Sony AI, FHIBE was built to stricter ethical standards than any previously released dataset. Every photo was taken with the clear consent of its participant. In total, 1,981 participants from 81 countries provided 10,318 images, representing diverse backgrounds in terms of race, gender, age, hairstyle, skin tone, and culture.

Sony AI went beyond simply gathering facial photos. Each image comes with detailed records of how it was taken, including the shooting environment, lighting, and camera settings. Researchers can use this information to pinpoint AI models’ biases under specific conditions, for example whether a model fails to recognize certain skin tones in low light, or recognizes people with short hair more accurately than people with long hair.
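To make the idea concrete, here is a minimal Python sketch of this kind of condition-by-condition (disaggregated) evaluation. It assumes a model has already been run on each image and that whether it succeeded has been recorded next to the image’s annotations. The field names (`lighting`, `skin_tone`, `correct`) and the toy records are hypothetical; they are not FHIBE’s actual schema or data, and the numbers are not real results.

```python
from collections import defaultdict

# Hypothetical per-image evaluation records. Field names are illustrative
# assumptions, not FHIBE's real schema, and the values are toy data.
records = [
    {"lighting": "low", "skin_tone": "darker", "correct": False},
    {"lighting": "low", "skin_tone": "darker", "correct": True},
    {"lighting": "low", "skin_tone": "lighter", "correct": True},
    {"lighting": "low", "skin_tone": "lighter", "correct": True},
    {"lighting": "bright", "skin_tone": "darker", "correct": True},
    {"lighting": "bright", "skin_tone": "lighter", "correct": True},
]


def accuracy_by_group(rows, keys):
    """Return {group: accuracy}, where a group is the tuple of values for `keys`."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for row in rows:
        group = tuple(row[k] for k in keys)
        totals[group] += 1
        hits[group] += int(row["correct"])
    return {g: hits[g] / totals[g] for g in totals}


def max_accuracy_gap(acc):
    """Largest accuracy difference between the best and worst group."""
    return max(acc.values()) - min(acc.values())


acc = accuracy_by_group(records, ["lighting", "skin_tone"])
for group, value in sorted(acc.items()):
    print(group, f"{value:.2f}")
print("gap:", max_accuracy_gap(acc))
```

Breaking results down by combinations of attributes, rather than one attribute at a time, is what lets a gap that appears only under one condition (here, darker skin tones in low light) stand out.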

A paper describing the development of FHIBE was published in the scientific journal Nature. It highlights the dataset’s capacity for far more detailed, empirical analysis than existing bias-evaluation datasets.

Alice Xiang, head of AI governance at Sony Group and a lead researcher in AI ethics at Sony AI, described the project as a “turning point in AI ethics.” She noted that “the AI industry has long used non-consented, non-diverse data, which has led to AI replicating or exacerbating societal inequalities.” She emphasized that “FHIBE is proof that responsible data collection is indeed possible” and pointed to the ethical principles built into the dataset, including informed consent, privacy protection, fair compensation, safety, and diversity.

Michael Spranger, CEO of Sony AI, stated, “To become a tool that expands human imagination, AI technology must first earn trust. FHIBE represents the first step in developing AI based on fairness and transparency.”

Using FHIBE, Sony AI researchers found that certain AI models were less accurate at recognizing individuals who use ‘She/Her/Hers’ pronouns. Tracing the cause revealed that greater variability in hairstyles significantly affected recognition accuracy, a factor rarely addressed in previous fairness research.

Moreover, when models were asked neutral questions such as “What is this person’s occupation?”, some associated certain races or genders with criminal activity or low social status. This is exactly the kind of subtle bias FHIBE is designed to diagnose.

Unlike traditional datasets that aim only to improve accuracy, FHIBE is also a tool for examining how AI ‘sees’ people, which is where its real significance lies.

FHIBE applies strict ethical standards not only to collection but also to ongoing management. Participants can withdraw their data at any time, and deleted images are replaced with new ones wherever possible. This framework keeps data providers in control of their own data.

FHIBE was developed over three years by Sony AI researchers and engineers working with legal, IT, and QA specialists around the world. The data collection process underwent legal review to ensure that safeguards for privacy protection and fair compensation were in place.

Sony AI has committed to updating the dataset on an ongoing basis so that it does not become outdated and its fairness standards keep pace with advances in AI models.

With AI now widely used in hiring, crime prediction, medical diagnostics, and more, fairness and ethics have become essential standards for tech companies. While many companies have focused solely on improving AI performance, Sony AI has elevated ‘fairness’ and ‘transparency’ to core technological values. FHIBE points to the direction the AI industry must take.

Sony AI asserts that “AI should expand human imagination and creativity, but it must always start with looking at people fairly through data.” The release of FHIBE is more than a new dataset; it is a declaration that proposes an ‘AI ethics’ solution for the entire industry. If ethics informs every stage, from data collection to management and application, AI can truly become a technology that expands human imagination rather than perpetuating prejudice.