How can you create an AI image that looks like a photograph?

Google’s newly released Gemini 2.5 Flash Image, also known as ‘Nano Banana’, offers one answer to this question. You can now create high-quality images from just a few lines of text, without professional editing tools. However, the quality of the result varies dramatically with the prompt you enter. Google’s own prompt-writing guide helps even beginners work with images like experts.

A prompt is the instruction you give to the AI: a sentence describing the image you want, which the model interprets and renders as a picture. If you simply enter “cat picture,” you get a generic cat image, but if you describe “a gray cat sitting by the window basking in warm sunlight, with a pot next to it, and framed as if shot with a 50mm lens,” the result is far more realistic and detailed.

Google emphasizes that Gemini’s main strength is its deep language understanding, so describing a scene in full sentences yields better results than listing keywords. Simply put, a prompt is closer to “a language for conversing with AI” than to a search term.

The more precise the prompt, the higher the quality of the output; vague prompts produce unpredictable results. For this reason, experts say, “Half of a good image comes from a good prompt.”

If you want realistic images, camera terminology is the most effective tool. When you specify the angle, lens, and lighting conditions, the model reproduces the scene as if it were an actual photo. For instance, “a close-up of a potter shot with an 85mm portrait lens in a sunlit studio” produces a much richer and more realistic image than simply “potter photo.”
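As a minimal sketch of this idea, the helper below assembles a sentence-style prompt from camera details. The `PhotoPrompt` class and its field names are hypothetical, chosen only for illustration; they are not part of any Gemini API, and the resulting string is just text you would paste in as the prompt.

```python
from dataclasses import dataclass


@dataclass
class PhotoPrompt:
    """Hypothetical container for the camera details a photo-style prompt might name."""

    shot: str     # framing, including its article, e.g. "A close-up"
    subject: str  # what is being photographed
    lens: str     # lens choice, including its article
    setting: str  # location and lighting

    def render(self) -> str:
        # Produce one descriptive sentence rather than a list of keywords.
        return f"{self.shot} of {self.subject}, shot with {self.lens} in {self.setting}."


prompt = PhotoPrompt(
    shot="A close-up",
    subject="a potter",
    lens="an 85mm portrait lens",
    setting="a sunlit studio",
).render()
print(prompt)
```

This prints `A close-up of a potter, shot with an 85mm portrait lens in a sunlit studio.` Keeping each camera decision in its own field makes it easy to vary one element, such as the lens, while leaving the rest of the scene unchanged.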

Gemini excels at simple graphics such as stickers and icons, but in these cases the style must be stated explicitly. A prompt such as “cute red panda sticker, bold outlines, cel shading, transparent background” delivers the desired result.

For logos that contain text, accuracy matters even more. The prompt should spell out the wording, font, color scheme, and icon elements. For example, “‘The Daily Grind’ logo in sans-serif font, black and white, with a coffee bean icon” can yield a high-quality result.
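One way to make sure none of these four elements is forgotten is a small checklist function. The sketch below is illustrative only: `logo_prompt` and its parameter names are hypothetical and simply build a text string; nothing here calls Gemini.

```python
def logo_prompt(text: str, font: str, colors: str, icon: str) -> str:
    """Combine the four logo elements named above; reject blanks so none is skipped."""
    fields = {"text": text, "font": font, "colors": colors, "icon": icon}
    missing = [name for name, value in fields.items() if not value.strip()]
    if missing:
        raise ValueError(f"missing logo details: {', '.join(missing)}")
    # Quote the wording so the model can tell it apart from the description.
    return f"'{text}' logo in {font} font, {colors}, with {icon}"


logo = logo_prompt("The Daily Grind", "sans-serif", "black and white", "a coffee bean icon")
print(logo)
```

Leaving any argument blank raises a `ValueError` that names the missing element, which is a cheap reminder before the prompt is submitted.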

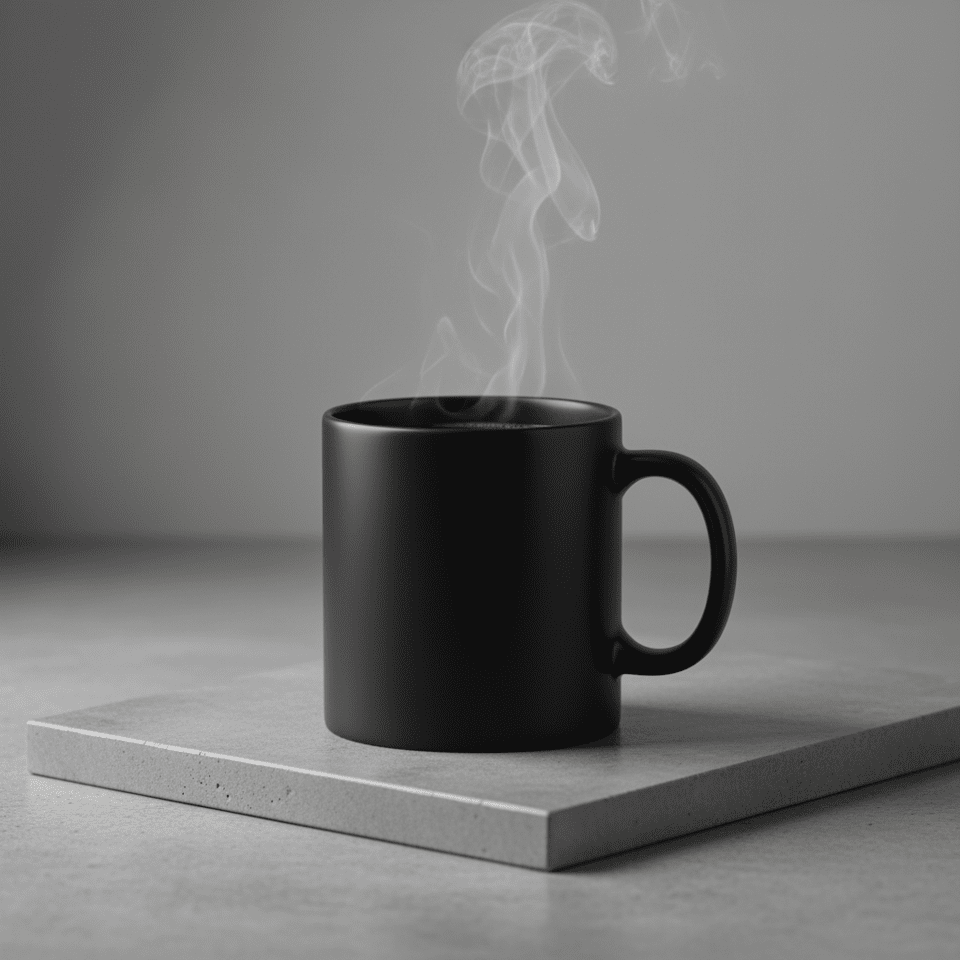

For e-commerce product images, describe the details as if you were setting up a real shoot. Specifying the lighting setup, camera angle, and focus area yields images close to those from a professional studio. The instruction “black ceramic mug, three-point softbox lighting, 45-degree angle, focus on coffee steam” is a good example.

When you need a clean, simple composition for website backgrounds, presentations, or marketing materials, leaving ample empty space is effective. It makes the subject stand out and leaves room for inserting text.

For instance, with a prompt like “a single delicate red maple leaf positioned in the bottom-right of a wide cream background,” the focus falls on the maple leaf, while the wide background produces a simple image well suited to titles or captions.

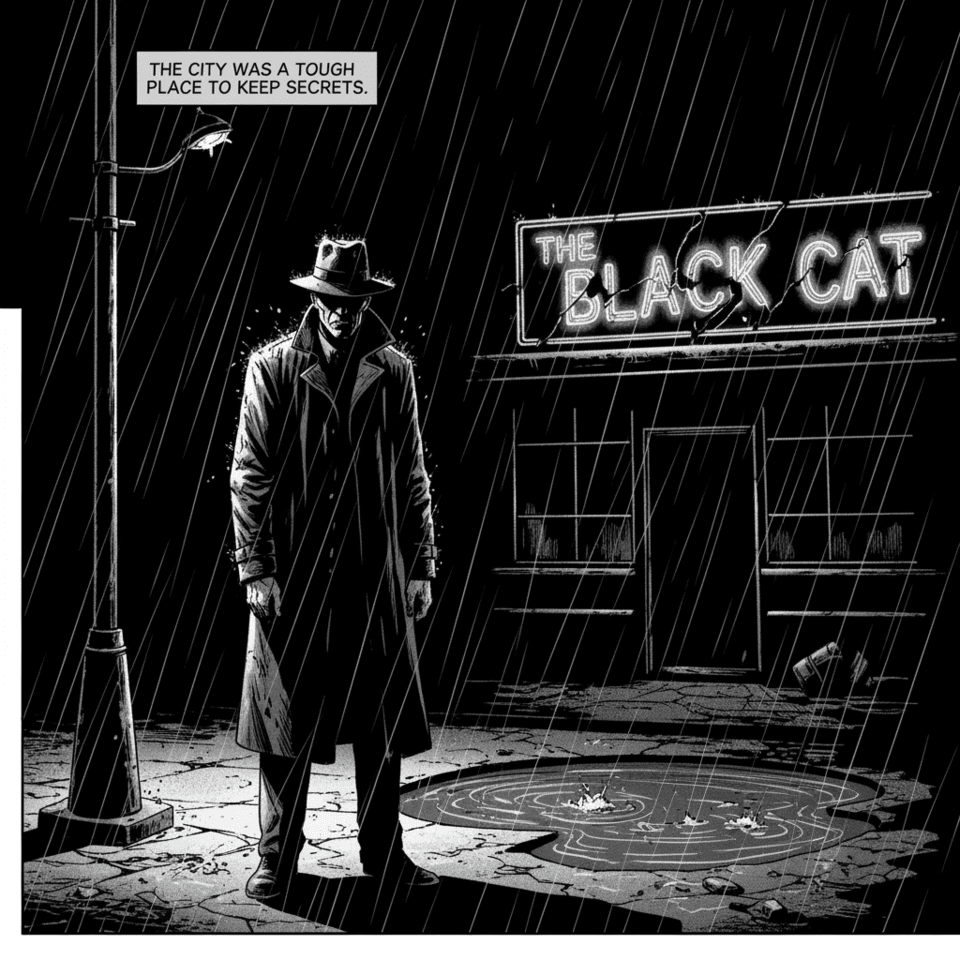

When creating sequential scenes such as comics or storyboards, give specific instructions about the character’s appearance, actions, background, and dialogue. For example, “a detective in a trench coat standing under a streetlamp in the rain, with neon signs reflecting on the wet road. The top caption reads ‘The city was a tough place to keep secrets’” conveys the scene clearly and supports the storytelling.
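For a multi-panel sequence, one plausible approach (an assumption here, not something the guide prescribes) is to reuse the same character description verbatim in every panel prompt so the wording stays consistent. The `panel_prompt` helper and the second panel's details are hypothetical and exist only to illustrate the pattern.

```python
CHARACTER = "A detective in a trench coat"  # repeated word for word in every panel


def panel_prompt(action: str, background: str, caption: str) -> str:
    """Describe one panel: character, action, background, then the caption text."""
    return (
        f"{CHARACTER} {action}, with {background}. "
        f"The top caption reads '{caption}'"
    )


panels = [
    # Panel 1 uses the example scene from the text above.
    panel_prompt(
        "standing under a streetlamp in the rain",
        "neon signs reflecting on the wet road",
        "The city was a tough place to keep secrets",
    ),
    # Panel 2 is invented purely for illustration.
    panel_prompt(
        "reading a note in a doorway",
        "rain dripping from the awning",
        "Someone had been here first",
    ),
]

for number, text in enumerate(panels, start=1):
    print(f"Panel {number}: {text}")
```

Because the character description lives in one constant, a change to the costume or appearance propagates to every panel prompt at once.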

Gemini also supports image editing. It can add or remove specific elements, modify only part of an image through inpainting, transfer styles, and even combine multiple images. Preserving essential features such as faces or logos while changing only the background is also possible with a single-line prompt.

Google emphasizes that “the more specific the prompt, the more refined the result.” Explaining context and intent together helps the model understand the request, and iterative follow-up instructions can steer the result toward what you want. It also recommends positive descriptions such as “empty street” over negative ones such as “no cars.”
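The advice on positive phrasing can be turned into a quick self-check before submitting a prompt. The sketch below is a simple heuristic, not a Google tool: the hypothetical `negative_phrases` function flags “no …” and “without …” wording so you can rewrite it by hand as a positive description.

```python
import re

# Matches "no <word>" or "without <word>" as whole words, ignoring case.
NEGATIVE_PATTERN = re.compile(r"\b(?:no|without)\s+\w+", re.IGNORECASE)


def negative_phrases(prompt: str) -> list[str]:
    """Return negatively phrased fragments that may be worth rewording."""
    return NEGATIVE_PATTERN.findall(prompt)


flagged = negative_phrases("A city street at dawn, no cars")
clean = negative_phrases("An empty city street at dawn")
print(flagged)
print(clean)
```

The first call returns `['no cars']` and the second returns an empty list. The check only points at candidate phrases; choosing the positive replacement, such as “empty street,” is still up to the writer.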

AI image generation is no longer exclusive to experts. Good results, however, depend on how you describe what you want. The user’s language now dictates the quality of the work.