87.4% of the public say “My personal information is important.”

Personal Information Protection Committee urgently needs policy tailored to the AI era.

As the scope of Artificial Intelligence (AI) usage in real life expands, protecting personal information has emerged as a significant issue. ⓒSolution News DB

The Personal Information Protection Committee (chaired by Hak-soo Koh; hereafter the Personal Information Committee) conducted a ‘Public Awareness Survey on Personal Information Protection Policy’ of 1,500 adults nationwide to mark its fifth anniversary. The survey was conducted online over eight days, from June 9 to 16, 2025.

This survey comprehensively covered the public’s overall awareness of personal information, an assessment of the Personal Information Committee’s policies, and policy directions to be pursued in the future.

According to the survey results, 87.4% of respondents said their personal information is important, and 92.4% regarded the protection of personal information itself as very important. This suggests that the public sees personal information as a core element in safeguarding everyday life and rights.

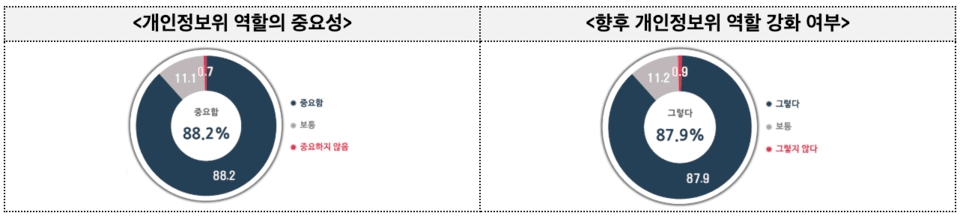

This awareness extended to recognition of the Personal Information Committee. Of those surveyed, 50.9% were aware of its existence, more than double the initial recognition rate of 23.6% in 2021. In addition, 88.2% felt that the role of the Personal Information Committee is important, and 87.9% agreed that its role should be further strengthened.

The most effective policy is ‘Strengthening investigations and penalties’… The public values practical effectiveness.

Among the top ten policies promoted by the Personal Information Committee, ‘Strengthening investigations and penalties through strict law enforcement’ was deemed the most effective policy, with 77.3% of respondents evaluating it as very effective or effective.

This was followed by ‘Rationalizing the investigation and penalty system through legal amendments’ (73.9%), ‘Introducing the right to be forgotten for children and adolescents’ (73.6%), and ‘Operating personal information handling policies’ (70.3%). All of the top eight policies received positive evaluations of over 65%.

The public places high value on substantive enforcement through laws and institutions. The prevailing view is that policies must be backed by concrete actions such as investigations and penalties, rather than mere declarations or recommendations, for people to feel their effect.

AI is changing the personal information protection environment… The public demands ‘policies that respond to technology.’

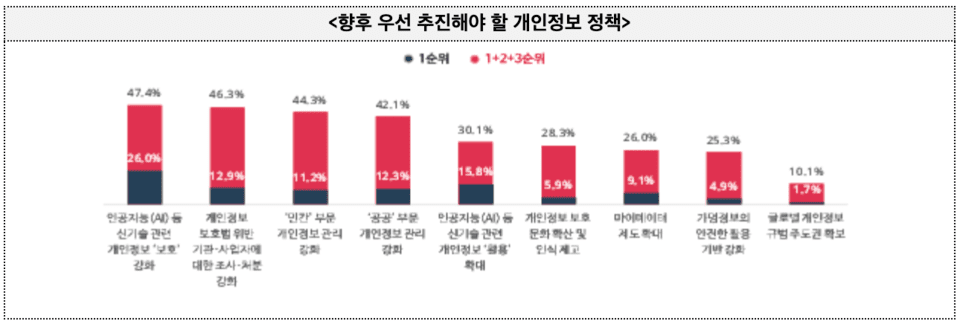

The top-priority policy for the future was ‘Strengthening personal information protection related to AI and other new technologies,’ selected by 26% of respondents. It was followed by ‘Expanding the use of personal information related to new technologies’ (15.8%), ‘Strengthening investigations and penalties for violations of personal information protection laws’ (12.9%), and ‘Enhancing personal information management in the public and private sectors’ (12.3% and 11.2%, respectively).

The public is concerned about how personal information is collected and used as AI technology develops, and is demanding protective measures to match. Respondents clearly recognize that existing systems alone are not enough to respond.

In an AI environment where data flows are complex and hard to predict, a system is needed that guarantees the information subject’s control in advance. This can be read as a demand for a policy direction in which data utilization and protection coexist without conflict.

Solution: Establishing evaluation criteria for technology and overhauling data governance.

The current protection system is built around traditional structures for processing personal information. AI technology, however, collects data in an unstructured manner and can generate new information through self-learning, which makes it difficult to manage under current legislation alone.

Performance evaluation criteria differentiated by technology type should be established. For example, capabilities of Large Language Models (LLMs) such as mathematical reasoning and long-text comprehension require evaluation methods different from general data standards. The performance evaluation dataset construction project that the Personal Information Committee is currently preparing is notable as a first attempt to address these differences.

The data governance system also needs to be reorganized. Information subjects should be able to verify when, where, and how their information is used, and this requires an integrated management system. The MyData system and operations based on pseudonymized information could serve as tools to improve effectiveness in this respect.

The effectiveness of law enforcement should also be reinforced. Personal information infringement cases that misuse new technology call for swift investigation and clear accountability, and the pool of technology-specialized personnel handling investigations and sanctions should be expanded.

As AI technology advances, both the sensitivity of personal information and its potential for use grow together. The public does not reject technological progress itself. Rather, it is clearly demanding explanations and measures that show under what principles the technology is used and how those principles protect individual rights.